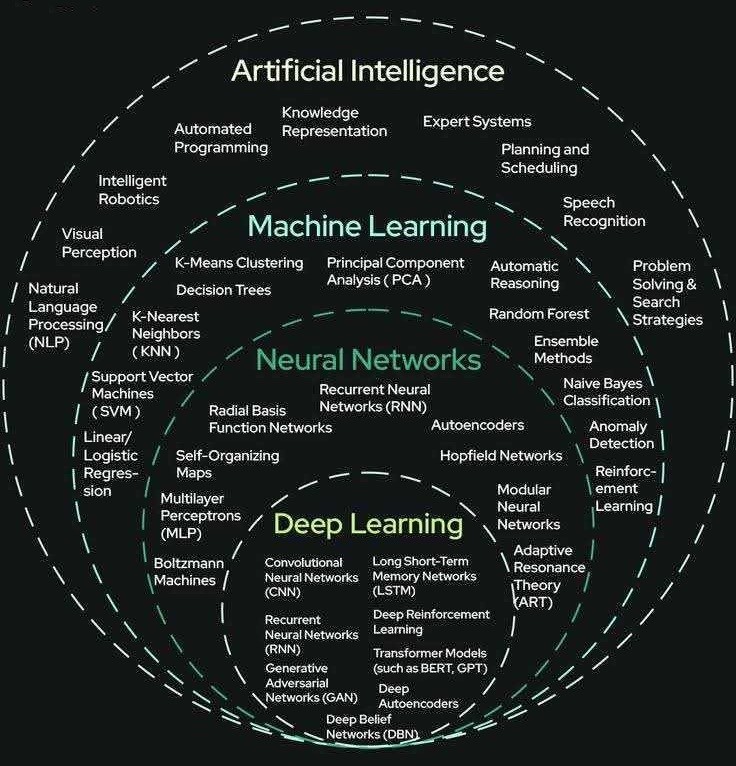

AI

The term "artificial intelligence" was coined by John McCarthy for the 1956 Dartmouth Workshop.

The workshop established AI as a formal field of study, bringing together experts in neural networks, computation theory, and automata theory to explore the machine simulation of human intelligence.

Its history traces back to ancient formal reasoning and Alan Turing's work on machine intelligence. Early AI development took place in the mid-1950s and 1960s with programs like Logic Theorist and General Problem Solver, which mimicked human problem-solving, and with the development of LISP, an early and influential AI programming language. Early AI research focused on rule-based systems, with a surge in funding and more complex system development occurring in the 1970s and 1980s.

Quantum machine learning (QML) is the study of quantum algorithms that solve machine learning tasks. Read more about Quantum AI.

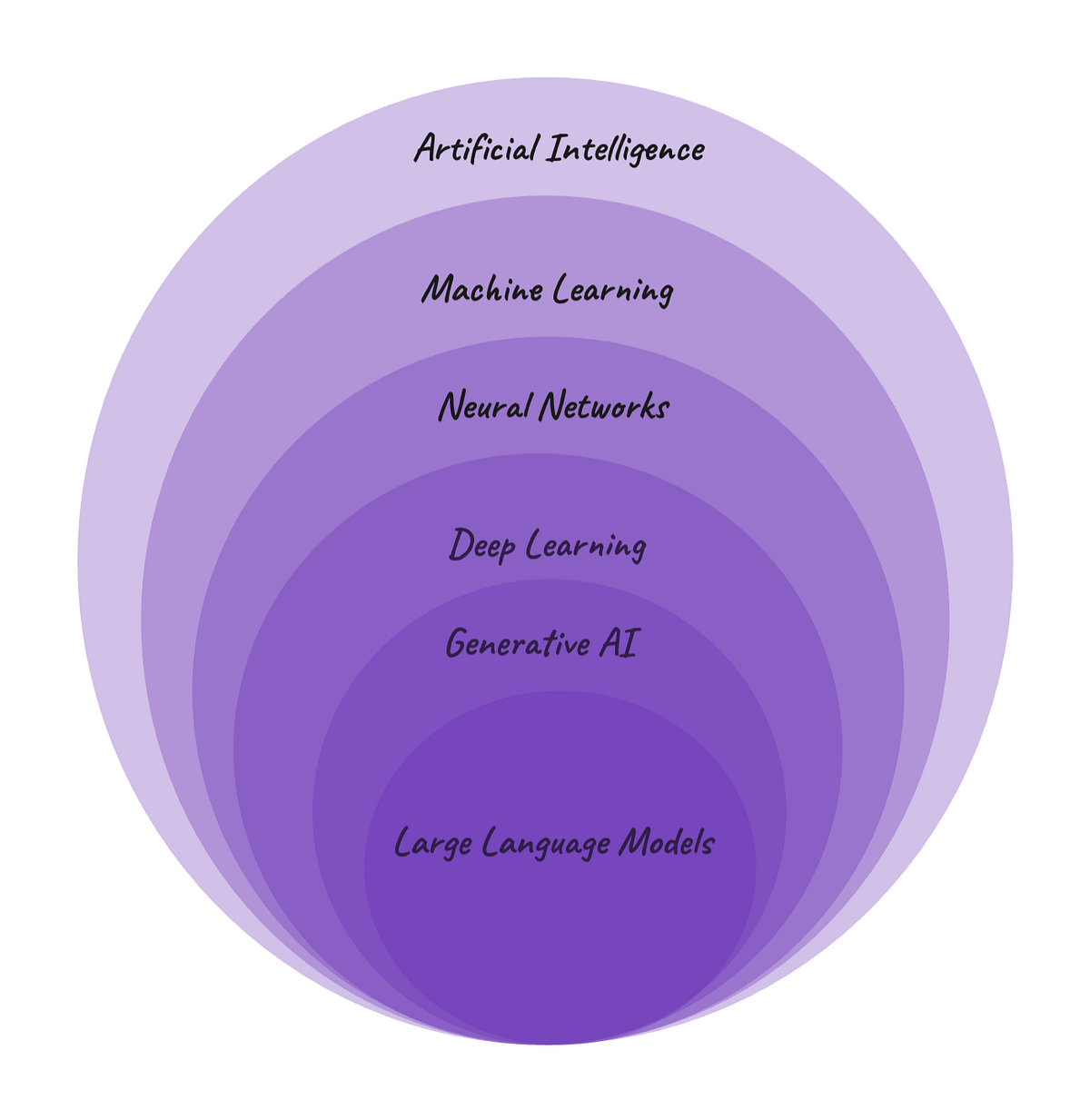

According to Britannica, the field of modern AI (as shown in the picture and covered in this tutorial) has continued to evolve with new techniques, and recent advancements such as large language models have reignited the conversation around AI capabilities.

AI concepts revolve around creating machines that can simulate human intelligence through learning, problem-solving, and decision-making. AI relies on algorithms and vast datasets to recognize patterns, understand language, and make predictions.

Artificial Intelligence

Artificial intelligence (AI) is the simulation of human intelligence in machines, enabling them to perform tasks like learning, reasoning, problem-solving, and perception.

AI systems use techniques such as machine learning and deep learning to analyze data, recognize patterns, and adapt their behavior to achieve goals. This technology has widespread applications, including automating customer support, enhancing medical diagnoses, and improving financial fraud detection.

- How Artificial Intelligence Works

Learning from Data: Instead of relying on explicit programming for every situation, AI systems learn from vast amounts of data.

Algorithms: Algorithms, including machine learning and deep learning, process this data to identify patterns, make predictions, and improve performance over time.

Perception and Action: AI systems can perceive their environment through sensors or data inputs, process this information, and then take actions or make decisions to achieve a specific goal.
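The perception-and-action loop described above can be sketched as a tiny rule-based agent. This is a hypothetical illustration, not how any particular AI product works: the `thermostat_agent` function, its target temperature, and the sensor readings are all assumptions, and a real AI system would typically replace the hand-written rule with a model learned from data.

```python
from typing import List


def thermostat_agent(temperature: float, target: float = 21.0, tolerance: float = 0.5) -> str:
    """Decide on an action from one perception (a temperature reading)."""
    if temperature < target - tolerance:
        return "heat"
    if temperature > target + tolerance:
        return "cool"
    return "idle"


def run_agent(readings: List[float]) -> List[str]:
    """Perceive each sensor reading in turn and record the chosen action."""
    return [thermostat_agent(reading) for reading in readings]


print(run_agent([18.0, 21.2, 23.5]))  # ['heat', 'idle', 'cool']
```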

- Key Capabilities

Problem-Solving and Reasoning: AI can solve complex problems and make informed decisions in unpredictable situations.

Learning and Adaptation: AI systems can improve their performance and adapt their behavior based on new experiences and data sets.

Perception: This includes the ability to "see" (image recognition), "hear" (voice understanding), and process other sensory information.

Language Understanding: AI enables machines to understand and translate both spoken and written language.

- Common Applications

Customer Service: Automating support with virtual assistants and personalized recommendations.

Retail: Offering personalized shopping experiences, managing inventory, and optimizing store layouts.

Manufacturing: Analyzing factory data to forecast demand and optimize production.

Finance: Identifying fraudulent transactions, performing credit scoring, and managing data.

Healthcare: Acting as personal health coaches and assisting with diagnostics and treatment.

- Benefits and Challenges

Benefits: It can improve efficiency, accuracy, and decision-making across industries, freeing humans from tedious tasks and unlocking valuable insights from data.

Challenges: Concerns include ethical issues such as potential biases in algorithms, misuse of AI to create malicious content, job displacement, and data privacy.

ML - Machine Learning

Machine learning (ML) is a type of artificial intelligence that allows computer systems to learn and improve from data without being explicitly programmed for every task. It uses statistical algorithms to analyze large datasets, identify patterns, and then make predictions or decisions about new, unseen data.

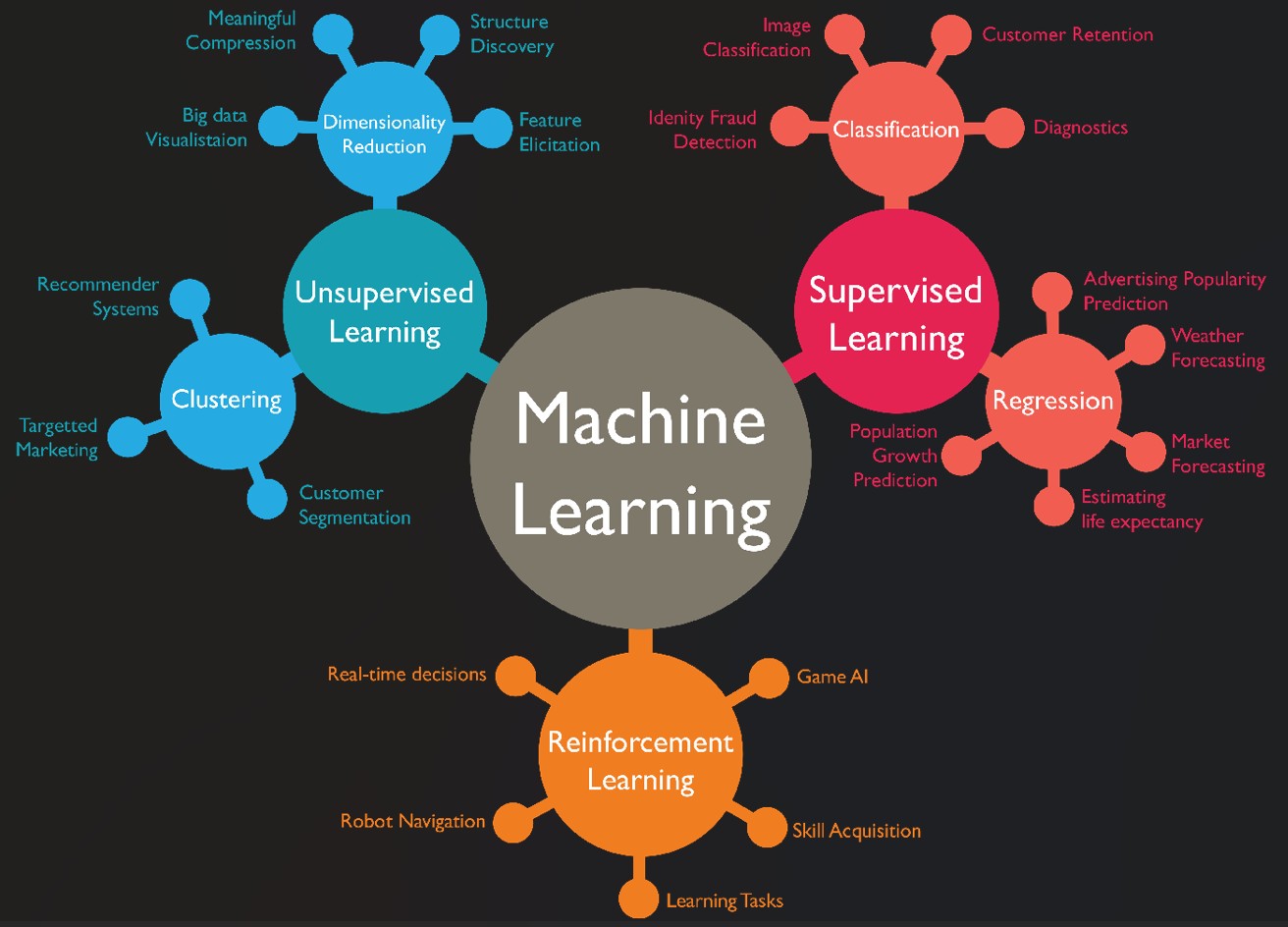

Common types of ML include supervised learning (using labeled data), unsupervised learning (finding patterns in unlabeled data), and reinforcement learning (learning through rewards and penalties).

- How Machine Learning Works

Data: ML systems are fed large amounts of data.

Algorithms: Algorithms analyze this data to find patterns and correlations.

Model Training: The algorithms "train" a model by learning these patterns, much like humans learn from experience.

Predictions/Decisions: The trained model can then be used to make predictions or decisions on new, unseen data.

Improvement: Given more relevant, good-quality data, the model generally becomes better at its task.
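A minimal sketch of these steps, assuming the simplest possible model: a straight line `y = w*x + b` fitted by ordinary least squares on labeled examples and then used to predict an unseen input. The `train` and `predict` helpers and the hours-versus-score data are hypothetical; real projects would normally use a library such as scikit-learn.

```python
from typing import List, Tuple


def train(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Model training: fit y = w*x + b with ordinary least squares (closed form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    b = mean_y - w * mean_x
    return w, b


def predict(model: Tuple[float, float], x: float) -> float:
    """Prediction: apply the learned pattern to new, unseen data."""
    w, b = model
    return w * x + b


# Hypothetical labeled data: hours studied -> exam score
hours = [1.0, 2.0, 3.0, 4.0]
scores = [52.0, 54.0, 56.0, 58.0]
model = train(hours, scores)
print(model)                # (2.0, 50.0)
print(predict(model, 5.0))  # 60.0
```

Here training is a single closed-form calculation; many other ML algorithms instead improve their parameters iteratively over repeated passes through the data.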

- Types of Machine Learning

Supervised Learning: The algorithm is trained on labeled data, where the "correct" output is known, allowing it to make predictions for similar new data (e.g., classifying emails as spam or not spam).

Unsupervised Learning: The algorithm works with unlabeled data to discover hidden patterns, groupings, or structures on its own (e.g., grouping customers with similar buying habits).

Reinforcement Learning: The algorithm learns by trial and error, receiving "rewards" for correct actions and "penalties" for incorrect ones, much like a person learns a new skill through practice.
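To make unsupervised learning concrete, here is a hedged sketch of k-means clustering, one common unsupervised algorithm, grouping hypothetical customers by annual spend without any labels. The `kmeans_1d` function, its simple deterministic initialization, and the spending data are assumptions chosen for readability.

```python
from typing import List, Tuple


def kmeans_1d(values: List[float], k: int, iterations: int = 10) -> Tuple[List[float], List[int]]:
    """Cluster 1-D values into k groups; return (centroids, cluster label for each value)."""
    if not 1 <= k <= len(values):
        raise ValueError("k must be between 1 and the number of values")
    # Simple deterministic initialization: evenly spaced picks from the sorted values.
    step = max(1, len(values) // k)
    centroids = sorted(values)[::step][:k]
    labels = [0] * len(values)
    for _ in range(iterations):
        # Assignment step: each value joins its nearest centroid.
        labels = [min(range(k), key=lambda c: abs(v - centroids[c])) for v in values]
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [v for v, label in zip(values, labels) if label == c]
            if members:
                centroids[c] = sum(members) / len(members)
    return centroids, labels


# Hypothetical annual spend per customer (no labels are given to the algorithm)
spend = [120.0, 150.0, 130.0, 900.0, 950.0, 1000.0]
centroids, labels = kmeans_1d(spend, k=2)
print(labels)                            # [0, 0, 0, 1, 1, 1]
print([round(c, 1) for c in centroids])  # [133.3, 950.0]
```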

- Real-World Applications

Recommendation Engines: Suggesting movies, music, or products based on your past choices (e.g., Netflix, Spotify).

Fraud Detection: Identifying and flagging fraudulent transactions in finance.

Healthcare: Accelerating research, improving diagnostics, and personalizing treatments.

Personal Assistants: Powering virtual assistants and large language models.

Autonomous Vehicles: Enabling cars to "learn" to drive.

NN - Neural Networks

Neural networks (NN) are a type of machine learning model, inspired by the human brain, that learn by identifying patterns in data through interconnected layers of artificial "neurons" (such as perceptrons). By adjusting the strength of the connections (weights) between these artificial neurons during training, with the necessary gradients computed by a process called backpropagation, the network learns to make predictions or classifications, making it useful for tasks like image recognition, natural language processing, and complex data analysis.

- How Neural Networks Work

Neurons: At the core of a neural network are artificial neurons, or nodes, that receive inputs.

Connections & Weights: Each neuron is connected to others by weighted connections, which represent the strength of the connection.

Layers: Neurons are organized into layers: an input layer that receives data, one or more hidden layers where processing occurs, and an output layer that produces the final result.

Processing: An input signal travels through the network, with each neuron processing its input and passing an output to the next layer.

Training (Learning): During training, the network's weights are adjusted to minimize the difference between its predicted output and the actual desired output for a given task. This is done using an algorithm called backpropagation.
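The training steps above can be illustrated with a single artificial neuron (sigmoid activation) learning the logical AND function. With only one neuron there are no hidden layers, so backpropagation reduces to one gradient step per example; the `train_neuron` helper, learning rate, and epoch count are illustrative assumptions.

```python
import math
from typing import List, Tuple

Example = Tuple[Tuple[float, float], float]  # ((input1, input2), target)


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_neuron(data: List[Example], lr: float = 0.5, epochs: int = 5000) -> Tuple[List[float], float]:
    w = [0.0, 0.0]  # connection weights
    b = 0.0         # bias
    for _ in range(epochs):
        for (x1, x2), target in data:
            # Forward pass: weighted sum of the inputs, then the activation function.
            out = sigmoid(w[0] * x1 + w[1] * x2 + b)
            # Backward pass: for sigmoid + cross-entropy loss, the gradient w.r.t. the
            # weighted sum is (out - target); nudge each weight against its gradient.
            err = out - target
            w[0] -= lr * err * x1
            w[1] -= lr * err * x2
            b -= lr * err
    return w, b


AND: List[Example] = [((0.0, 0.0), 0.0), ((0.0, 1.0), 0.0), ((1.0, 0.0), 0.0), ((1.0, 1.0), 1.0)]
w, b = train_neuron(AND)
preds = [round(sigmoid(w[0] * x1 + w[1] * x2 + b)) for (x1, x2), _ in AND]
print(preds)  # [0, 0, 0, 1]
```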

- Key Features

Pattern Recognition: They excel at finding complex patterns and correlations in raw data that might be missed by simpler algorithms.

Adaptability: Neural networks can adapt and improve their performance as they are trained on more data.

Non-linear Processing: They are powerful because they can capture complex, non-linear relationships in data.

- Real World Applications

Image Recognition: Identifying objects and features in images.

Natural Language Processing (NLP): Understanding and generating human language.

Machine Translation: Translating text from one language to another.

Prediction: Forecasting future trends or outcomes based on historical data.

DL - Deep Learning

Deep learning (DL) is a branch of machine learning that uses multilayered artificial neural networks to learn patterns from large amounts of data, loosely inspired by how the human brain processes information. These complex networks can then be used for tasks like image and speech recognition, language translation, and making recommendations, automating processes that would typically require human intelligence.

- How It Works

Neural Networks: Deep learning models use artificial neural networks, which are inspired by the structure and function of the human brain.

Layers: These networks consist of multiple layers—an input layer, several hidden layers, and an output layer.

Learning from Data: Data enters the input layer, is processed through the hidden layers, and results in a prediction or decision from the output layer. The more layers a network has, the "deeper" it is and the more complex patterns it can learn.

Pattern Recognition: Instead of relying on predefined rules, deep learning systems learn to recognize features and relationships directly from raw, often unstructured, data.
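The layer-by-layer flow described above can be sketched as a forward pass through a small network with two hidden layers. The weights are hand-picked, hypothetical numbers so the arithmetic is easy to follow; in a real deep learning model they would be learned during training, typically with a framework such as TensorFlow or PyTorch.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]     # one row of input weights per neuron
Layer = Tuple[Matrix, Vector]  # (weights, biases)


def dense(x: Vector, weights: Matrix, bias: Vector) -> Vector:
    """Fully connected layer: each neuron computes a weighted sum of all inputs plus its bias."""
    return [sum(xi * wi for xi, wi in zip(x, row)) + b for row, b in zip(weights, bias)]


def relu(x: Vector) -> Vector:
    """Non-linear activation: negative values become 0."""
    return [max(0.0, v) for v in x]


def forward(x: Vector, layers: List[Layer]) -> Vector:
    """Pass the input through every hidden layer (with ReLU), then the linear output layer."""
    for weights, bias in layers[:-1]:
        x = relu(dense(x, weights, bias))
    weights, bias = layers[-1]
    return dense(x, weights, bias)


# Hypothetical weights: 2 inputs -> 3 hidden neurons -> 2 hidden neurons -> 1 output
layers: List[Layer] = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, 0.0]),
    ([[1.0, 0.0, 1.0], [0.0, 1.0, -1.0]], [0.0, 0.5]),
    ([[2.0, 1.0]], [-1.0]),
]
print(forward([1.0, 2.0], layers))  # [5.0]
```

Adding another `(weights, biases)` pair to `layers` makes the network "deeper" without changing `forward`.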

- Key characteristics of Deep Learning

Autonomous: Deep learning models can learn and improve their performance from data with limited human intervention, for example without hand-crafted features.

Unsupervised Learning: Many deep learning models can work with unlabeled data, extracting relevant features and characteristics on their own.

Complex Problem-Solving: The multilayered architecture allows deep learning to handle complex problems that traditional machine learning models struggle with.

- Real World Applications

Image Recognition: Identifying objects, faces, and scenes in images.

Speech Recognition: Transcribing spoken words into text and enabling voice-controlled devices.

Natural Language Processing: Translating languages, analyzing sentiment, and generating human-like text.

Recommender Systems: Suggesting products or content based on user behavior, such as on Netflix or Amazon.

Robotics: Training robots to perform complex tasks like navigation and manipulation.

More Deep Learning Resources: Deep Learning, Powering the Future of Intelligent Systems

GenAI - Generative Artificial Intelligence

Generative AI (GenAI) is a type of artificial intelligence that creates new content, such as text, images, music, and videos, by learning patterns from vast datasets and generating novel, human-like outputs in response to prompts. Unlike traditional AI, which analyzes or categorizes data, generative AI produces original material by learning the underlying structure of its training data and using it to predict the next logical element in a sequence.

- How Generative AI Works

Training: Generative AI models are trained on immense datasets of existing content, like millions of images or large volumes of text.

Pattern Recognition: During training, the model learns the patterns, structures, and relationships within this data.

Content Generation: When given a prompt, the model uses these learned patterns to generate new, original content that mimics the style and characteristics of its training data.
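As a toy illustration of training, pattern learning, and generation, the sketch below builds a bigram (word-pair) model: it counts which word follows which in a tiny made-up corpus, then extends a one-word prompt by repeatedly choosing the most frequent next word. This is a deliberately simple stand-in; modern generative models learn far richer patterns with neural networks, and they usually sample rather than always picking the top choice.

```python
from collections import Counter, defaultdict
from typing import Dict


def train_bigrams(text: str) -> Dict[str, Counter]:
    """Training: count, for each word, which words follow it."""
    words = text.lower().split()
    follows: Dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def generate(model: Dict[str, Counter], prompt: str, max_words: int = 5) -> str:
    """Generation: repeatedly append the most frequent next word (greedy decoding)."""
    out = [prompt.lower()]
    for _ in range(max_words):
        candidates = model.get(out[-1])
        if not candidates:
            break  # no learned continuation for this word
        out.append(candidates.most_common(1)[0][0])
    return " ".join(out)


corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigrams(corpus)
print(generate(model, "the", max_words=4))  # the cat sat on the
```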

- Key characteristics of Gen AI

Content Creation: Its primary function is to generate new content rather than just analyze existing information.

Creativity: It uses algorithms to mimic human creativity by producing unique material.

Prompt-Driven: It responds to user inputs or "prompts" to create specific content, such as generating an image of a person who doesn't exist from a textual description.

- Common Types of Generative AI

Large Language Models (LLMs): Used for generating text-based content, including conversations, stories, and code.

Image Generators: Create new images from textual descriptions.

Audio and Video Tools: Generate new music, sound effects, or video clips.

- Use Cases

Content Creation: Assisting with writing marketing copy, creating background music, or generating script ideas.

Design: Generating product designs or creating realistic images of non-existent people.

Software Development: Generating code or assisting with code review.

LLM - Large Language Models

Large language models (LLMs) are a form of artificial intelligence that process and generate human-like text by learning from vast amounts of data. They power many generative AI applications, excelling at tasks like text generation, translation, summarization, and question answering by modeling complex language patterns with transformer architectures.

LLMs achieve their capabilities through deep learning on massive datasets and interact with users via natural language prompts, though they face challenges related to cost, data bias, and potential misinformation.

LangChain is a framework for developing applications powered by large language models (LLMs).

- How Generative LLMs Work

Vast Data Training: LLMs are trained on colossal datasets, including books and articles, to learn grammar, facts, and reasoning skills.

Transformer Architecture: The underlying architecture of LLMs is often a transformer model, which uses self-attention mechanisms to focus on important parts of the input text and understand the relationships between words.

Text Prediction: At their core, LLMs work by predicting the next most likely token (a word or piece of a word) in a sequence, which enables them to generate coherent and relevant text.
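The self-attention mechanism mentioned above can be sketched as single-head scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, in plain Python. As a simplifying assumption, the token vectors are used directly as queries, keys, and values; real transformers first multiply them by learned projection matrices and use many attention heads. The embeddings are hypothetical.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: Vector) -> Vector:
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product self-attention with Q = K = V = tokens (simplification)."""
    d = len(tokens[0])
    outputs = []
    for q in tokens:
        # Score how strongly this token attends to every token, including itself.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in tokens]
        weights = softmax(scores)  # attention weights sum to 1
        # The output is the attention-weighted average of the value vectors.
        outputs.append([sum(w * v[j] for w, v in zip(weights, tokens)) for j in range(d)])
    return outputs


# Hypothetical 2-dimensional embeddings for a three-token input
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
for row in self_attention(tokens):
    print([round(x, 3) for x in row])
```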

- Key Capabilities

Natural Language Understanding: LLMs can grasp the complexities and nuances of human language.

Content Creation: They generate text, write code, and create summaries; multimodal variants can also work with other forms of content like images.

Information Retrieval: They provide answers to questions and can be used for tasks like sentiment analysis.

- Real World Applications

Content & Marketing: Used for generating pitches and relevant sales content.

Customer Service: Powers customer contact centers by building conversational agents and providing real-time assistance to human agents.

Code Generation: Assisting developers by writing new code.

Translation: Translating text from one language to another.

- Challenges and Considerations

Cost & Resources: Training LLMs requires significant computational power and can be very expensive.

Bias and Misinformation: Since they are trained on existing data, LLMs can reflect biases present in that data and risk generating inaccurate or misleading information (hallucinations).

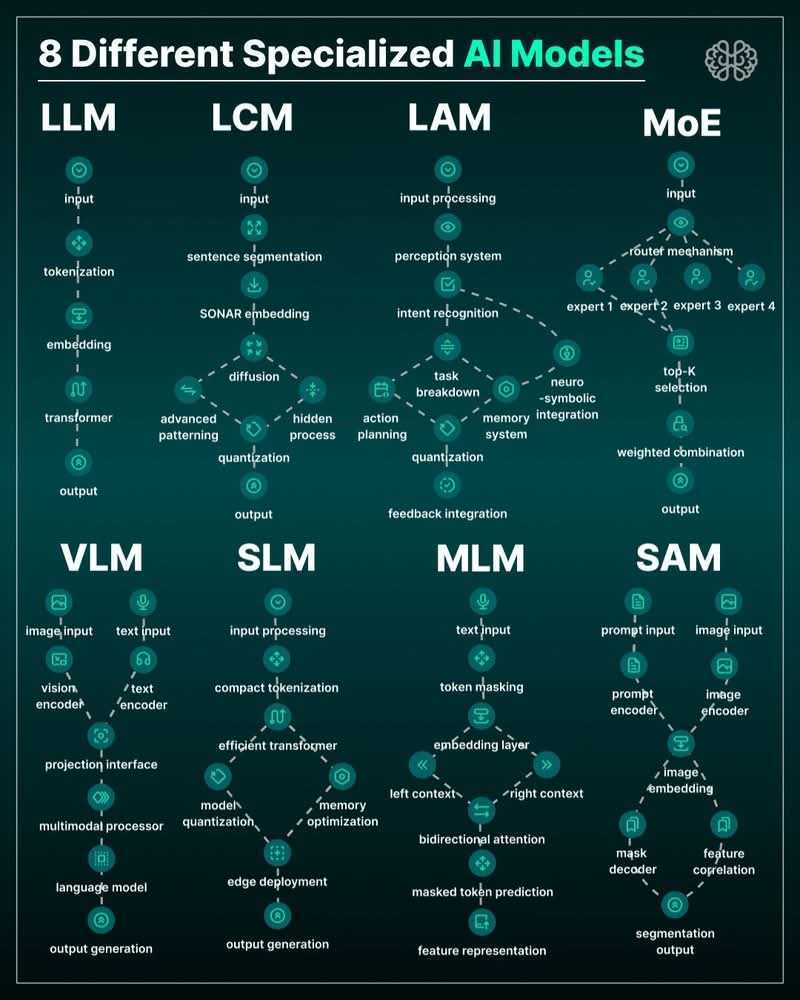

Prominent specialized AI model types: 8 specialized AI model types, Powering the Future of Intelligent Systems (LCM, LAM, MoE, VLM, SLM, MLM, SAM, etc.).

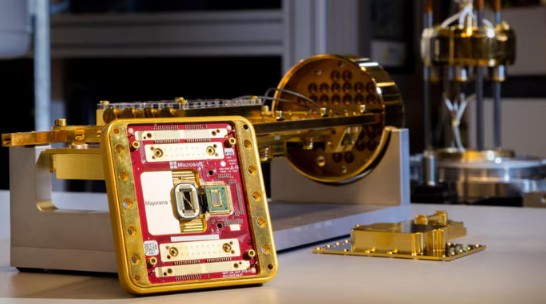

- Quantum AI (QML) is the integration of quantum computing and artificial intelligence, using quantum principles like superposition and entanglement to run AI models. By leveraging the processing power of quantum computers, it aims to enhance AI capabilities, potentially allowing certain complex problems to be solved much faster than on traditional computers. This fusion could transform fields such as machine learning, data analysis, and scientific discovery. Read also an article about quantization in LLMs (a model-compression technique that is unrelated to quantum computing).
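Superposition and entanglement can be illustrated with a small classical simulation of two qubits. The sketch applies a Hadamard gate to the first qubit (superposition) and then a CNOT gate (entanglement), producing a Bell state in which only the outcomes 00 and 11 can be measured. It is a hand-written state-vector simulation that assumes the basis order |00>, |01>, |10>, |11>; it is not the API of any quantum SDK.

```python
import math
from typing import List

State = List[complex]  # amplitudes for |00>, |01>, |10>, |11> (first qubit is the left bit)


def hadamard_first(state: State) -> State:
    """Apply a Hadamard gate to the first qubit, putting it into superposition."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def cnot(state: State) -> State:
    """Flip the second qubit when the first qubit is 1, entangling the two qubits."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def probabilities(state: State) -> List[float]:
    # Born rule: each outcome's probability is the squared magnitude of its amplitude.
    return [abs(a) ** 2 for a in state]


start: State = [1 + 0j, 0j, 0j, 0j]  # both qubits start in |0>
bell = cnot(hadamard_first(start))
print([round(p, 3) for p in probabilities(bell)])  # [0.5, 0.0, 0.0, 0.5]
```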

Quantum AI: Cloud-based quantum computing, Azure Quantum, Microsoft Quantum, Microsoft Quantum CPU: The Heart of a New Era, TensorFlow Quantum, dwavequantum.com, google-cirq, aws-quantum-ai, ibm-quantum, What is quantum computing?, Azure Quantum - Majorana

The AI boom

AI vs. Generative AI

Traditional AI: a broad field in which machines perform human-like tasks, analyzing data to make predictions, classifications, or decisions.

Generative AI: a specialized subset of AI that creates novel content, such as text, images, and code, by learning patterns from vast datasets.

The development of AI and GenAI applications involves using deep learning models, like transformers and GANs, to process large amounts of data, enabling them to generate contextually appropriate outputs in response to prompts. GenAI has transformative potential across industries by automating tasks, enhancing creativity, and improving efficiency in areas like software development and content creation.

Outlook for the future

Generative AI is developing rapidly, and its range of applications is constantly expanding. In the future, we could see even more diverse and sophisticated AI applications that change the way we create and consume content. AI is likely to play an increasingly important role in a wide range of fields.

In the future, AI could revolutionize many sectors. In medicine, for example, it can help develop new drugs and treatments by analyzing large amounts of data and simulating their effects. In education and research, AI can help students and researchers create new knowledge and make connections between different disciplines.

The AI boom is an ongoing period of technological progress in the field of artificial intelligence (AI) that started in the late 2010s before gaining international prominence in the 2020s. Examples include generative AI technologies, such as large language models and AI image generators by companies like OpenAI, as well as scientific advances, such as protein folding prediction led by Google DeepMind.

As of 2025, ChatGPT is the fifth most visited website globally, behind Google, YouTube, Facebook, and Instagram.

Future of AI and GenAI Applications: The future of AI, 11 Key Predictions, The 2025 AI Index Report, The Future of AI in Business, AI 2027

Generative AI and Vibe Coding tools

Generative AI tools have become more common since the AI boom of the 2020s. GenAI tools create new content, including text, images, and audio, based on user prompts. Examples include large language models like ChatGPT, Google Gemini, and Claude for text-based tasks; image generators like Midjourney and Adobe Firefly; and audio tools like ElevenLabs. Many platforms also offer integrated AI features, such as Microsoft Copilot, Google Cloud's Gemini, Canva's Magic Design, and IBM Generative AI.

What is enterprise AI?

Enterprise artificial intelligence is the integration of advanced AI-enabled technologies and techniques within large organizations to enhance business functions. It encompasses routine tasks such as data collection and analysis, plus more complex operations such as automation, customer service, and risk management.

What is Azure AI Foundry?

Azure AI Foundry is a unified Azure platform-as-a-service offering for enterprise AI operations, model builders, and application development. This foundation combines production-grade infrastructure with friendly interfaces, enabling developers to focus on building applications rather than managing infrastructure.

Companies developing GenAI include: OpenAI, Google DeepMind, DeepSeek, Anthropic, Microsoft, Meta, IBM, Broadcom, Amazon, Yandex, Baidu, xAI

Major GenAI Tools: ChatGPT, DALL-E, Gemini, Claude, Copilot, Midjourney, Copy.ai, Synthesia, Runway, DeepSeek, Baidu Chat, and many others.

Vibe coding tools:

Vibe coding is an AI-assisted software development technique popularized by Andrej Karpathy in February 2025. Vibe coding tools are AI-assisted environments that allow users to generate code by describing their intent in natural language rather than writing code line by line.

GitHub Copilot, AlphaCode, Gemini, Ollama, Mistral

Programming Language Libraries Used to Develop AI Apps

Languages Involved in AI App Development

In AI application development, the choice of programming language depends on the specific project requirements, balancing factors like performance, ease of use, and the availability of specialized libraries. While Python is the dominant language, others like C++, Java, R, and Julia are also widely used.

Machine Learning Languages, JS is Good for ML, DS with JS, Node.js AI Libs, AI with C, AI with R, AI with SQL, AI with Python, AI with Lisp

Java Top 7 Libraries for AI Development, Java Top 10 Libraries for Data Science, Spring AI Development, Data Science with Kotlin, Deep Java Library, Tools for GenAI, Java ML Libraries

Scientific Computing, Data Science (Data Manipulation) & Analysis, Data Visualization:

Data Science is used for tasks like data analysis, visualization, reporting, predictive analysis, and handling large datasets; more about data science.
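A first step in most data analyses is summarizing a dataset. This sketch uses only Python's built-in `statistics` module; the `summarize` helper and the sales figures are hypothetical, and libraries such as Pandas offer the same summaries for whole tables.

```python
import statistics
from typing import Dict, List


def summarize(values: List[float]) -> Dict[str, float]:
    """Basic descriptive statistics for one numeric column."""
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),  # sample standard deviation
        "min": min(values),
        "max": max(values),
    }


# Hypothetical daily sales figures
sales = [120.0, 135.0, 150.0, 110.0, 400.0]
report = summarize(sales)
for key, value in report.items():
    print(f"{key:>6}: {value:.2f}")
```

Here the mean (183.00) sits well above the median (135.00), a quick hint that one value (400.0) is an outlier worth investigating.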

Scientific Computing

Python is a valuable tool for performing complex mathematical calculations and scientific simulations; see more tools.

Python is a programming language widely used by Data Scientists.

Python Libraries for Data Science & Data Visualization: NumPy, Pandas, Dask, Vaex, Matplotlib, Seaborn, Plotly, Bokeh, Altair

Python Libraries for Machine Learning:

Artificial Intelligence (AI) & Machine Learning (ML): Python plays a significant role in developing AI and ML models, including image recognition and text processing.

Libraries for Machine Learning: scikit-learn, LightGBM, CatBoost, XGBoost, Statsmodels, Optuna, RAPIDS.AI cuDF and cuML

Automated Machine Learning (AutoML) Python Libraries:

Automated machine learning (AutoML) is the process of automating the tasks of creating and implementing machine learning models. It aims to make AI more accessible by automating the end-to-end development pipeline, from data preprocessing to model tuning and selection.

Python Libraries: PyCaret, H2O, TPOT, Auto-sklearn, FLAML
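The core AutoML idea, searching over candidate configurations and keeping the one that scores best on held-out data, can be sketched in a few lines. This is a hand-written illustration, not the API of PyCaret, H2O, TPOT, Auto-sklearn, or FLAML: it tunes a single hyperparameter (k in a k-nearest-neighbors classifier) on hypothetical data, whereas real AutoML tools search over many models, preprocessing steps, and hyperparameters.

```python
from typing import List, Tuple

Point = Tuple[float, float]
Dataset = List[Tuple[Point, int]]  # (features, class label)


def knn_predict(train: Dataset, x: Point, k: int) -> int:
    """Classify x by majority vote among its k nearest training points."""
    by_distance = sorted(train, key=lambda item: (item[0][0] - x[0]) ** 2 + (item[0][1] - x[1]) ** 2)
    votes = [label for _, label in by_distance[:k]]
    return max(set(votes), key=votes.count)


def accuracy(train: Dataset, val: Dataset, k: int) -> float:
    """Fraction of validation points classified correctly."""
    return sum(knn_predict(train, x, k) == y for x, y in val) / len(val)


def auto_select_k(train: Dataset, val: Dataset, candidates: List[int]) -> Tuple[int, float]:
    """Try every candidate configuration and keep the best validation score (first one wins ties)."""
    scored = [(k, accuracy(train, val, k)) for k in candidates]
    return max(scored, key=lambda pair: pair[1])


# Hypothetical data: two clusters, plus one mislabeled ("noisy") training point at (0.5, 0.5)
train: Dataset = [
    ((0.0, 0.0), 0), ((0.0, 1.0), 0), ((1.0, 0.0), 0), ((1.0, 1.0), 0),
    ((5.0, 5.0), 1), ((5.0, 6.0), 1), ((6.0, 5.0), 1), ((6.0, 6.0), 1),
    ((0.5, 0.5), 1),
]
val: Dataset = [((0.4, 0.6), 0), ((5.5, 5.5), 1), ((0.6, 0.4), 0)]
print(auto_select_k(train, val, candidates=[1, 3, 5]))  # (3, 1.0)
```

Using odd values of k with two classes avoids tied votes; with k = 1 the noisy point misleads the classifier, which the validation score exposes.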

Deep Learning Python Libraries:

Deep learning is a type of machine learning that uses artificial neural networks with multiple layers to enable computers to learn from data and perform tasks like image recognition, speech recognition, and natural language processing. These are some of the most popular and important Python libraries for deep learning:

Python Libraries: TensorFlow, PyTorch, Keras, MXNet, PyTorch Lightning, plus FastAPI (a web framework rather than a deep learning library, often used to serve trained models)

Python Libraries for Natural Language Processing:

Natural Language Processing (NLP) is a field of artificial intelligence (AI) that teaches computers to understand, interpret, and generate human language. NLP combines computational linguistics, machine learning, and deep learning to enable computers to process both text and spoken language. These are some of the most popular and important Python libraries for natural language processing:

Python Libraries: NLTK, spaCy, Gensim, Hugging Face Transformers, Fairseq
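Tokenizing text and counting words (a bag-of-words representation) is one of the first steps in many NLP pipelines. The sketch below does this with the standard library only; the regular expression and the tiny stopword list are simplifying assumptions, and libraries such as NLTK or spaCy provide far more robust tokenizers.

```python
import re
from collections import Counter
from typing import List

STOPWORDS = {"the", "a", "an", "is", "and", "of", "to"}  # tiny illustrative list


def tokenize(text: str) -> List[str]:
    """Lowercase the text and split it into word tokens (letters, digits, apostrophes)."""
    return re.findall(r"[a-z0-9']+", text.lower())


def bag_of_words(text: str) -> Counter:
    """Count the non-stopword tokens: a classic bag-of-words representation."""
    return Counter(tok for tok in tokenize(text) if tok not in STOPWORDS)


doc = "The model reads the text, and the model counts words."
print(tokenize(doc))
print(bag_of_words(doc).most_common(2))  # [('model', 2), ('reads', 1)]
```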

Real-Time and Edge Computing:

These libraries target real-time data processing and running models on embedded or "edge" devices, such as smartphones, DSPs, or custom hardware. The Python library Faust is a stream-processing library that ports the ideas of Kafka Streams to Python; it should not be confused with FAUST (Functional AUdio STream), a separate programming language for audio signal processing that can also target embedded devices.

Python Libraries: Faust, TensorFlow Lite
Python Libraries in Data Engineering and ETL

Learn useful functions, ETL techniques, API usage, unit testing, memory monitoring, and working with SDKs.

Python Libraries: Apache Airflow, PySpark
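An ETL (extract, transform, load) job can be sketched with the standard library alone: extract rows from CSV text, transform them (trim whitespace, normalize case, drop incomplete records, convert types), and load them into a database. The CSV content, table name, and helper functions are hypothetical; tools like Apache Airflow schedule such jobs, and PySpark runs them at scale.

```python
import csv
import io
import sqlite3
from typing import Dict, List, Tuple

Record = Tuple[int, str, float]

# Hypothetical raw export with inconsistent formatting and one missing amount
RAW_CSV = """order_id,customer,amount
1, alice ,19.99
2,BOB,5.00
3,carol,
4,dave,42.50
"""


def extract(text: str) -> List[Dict[str, str]]:
    """Extract: parse CSV text into one dict per row."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(rows: List[Dict[str, str]]) -> List[Record]:
    """Transform: clean values, convert types, and drop incomplete records."""
    clean: List[Record] = []
    for row in rows:
        if not row["amount"].strip():
            continue  # skip records with a missing amount
        clean.append((int(row["order_id"]), row["customer"].strip().lower(), float(row["amount"])))
    return clean


def load(records: List[Record], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned records into a database table."""
    conn.execute("CREATE TABLE IF NOT EXISTS orders (order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)")
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()


conn = sqlite3.connect(":memory:")
load(transform(extract(RAW_CSV)), conn)
print(conn.execute("SELECT customer, amount FROM orders ORDER BY order_id").fetchall())
# [('alice', 19.99), ('bob', 5.0), ('dave', 42.5)]
```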

Other Libraries for Data Science
